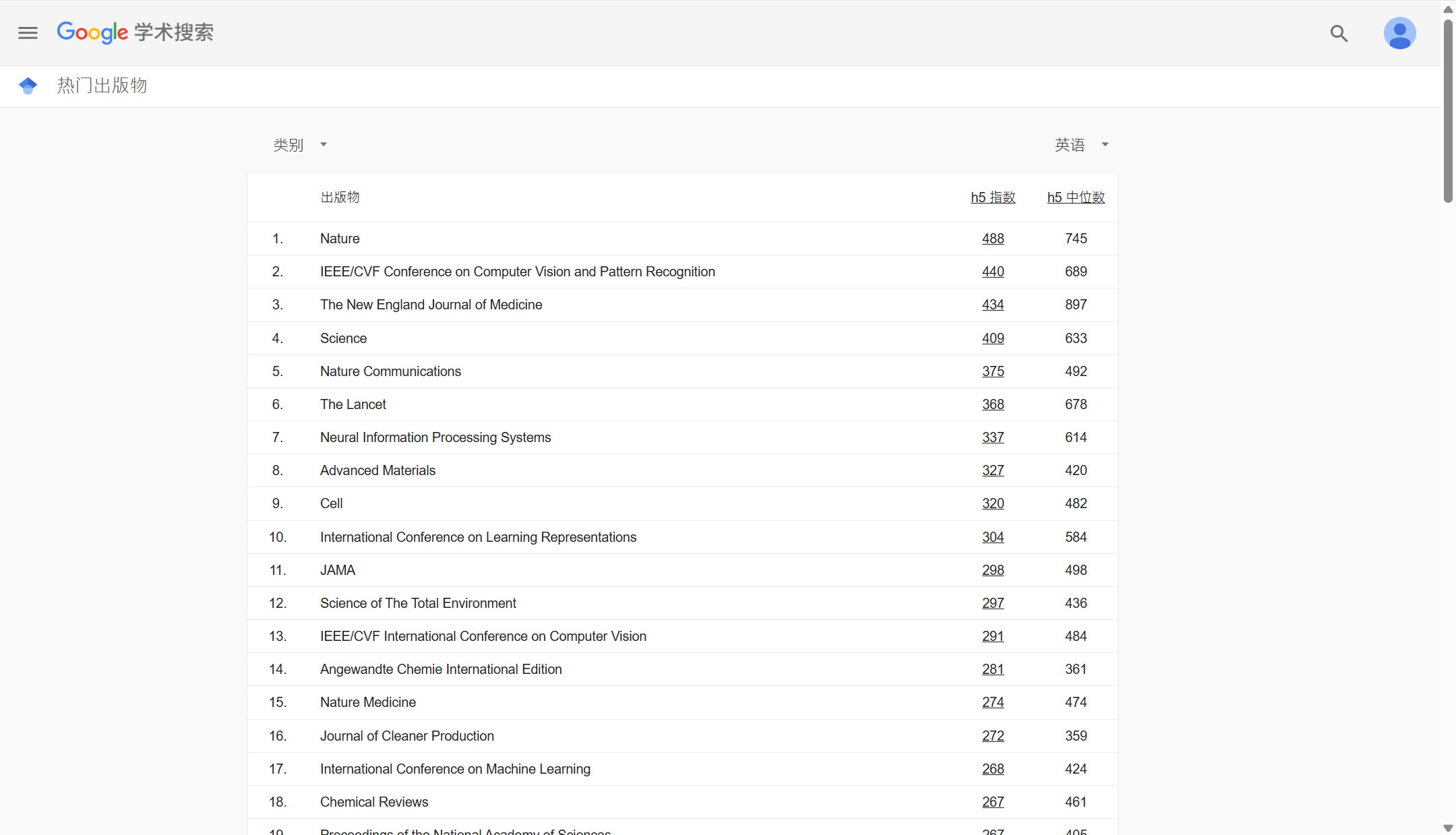

Recently, Liying Yang, a first-year Ph.D. candidate in the School of Computer Science and Engineering of the Faculty of Innovation Engineering at Macau University of Science and Technology (MUST), published a research paper as first author at the International Conference on Computer Vision (ICCV). The paper is entitled "Not All Frame Features Are Equal: Video-to-4D Generation via Decoupling Dynamic-Static Features", and its corresponding author is Associate Professor Yanyan Liang of the same school. ICCV is a premier international conference in artificial intelligence and computer vision and is recognized as a Category A conference by the China Computer Federation (CCF). With a Google Scholar H5-index of 291, it ranks 13th among all publications worldwide. This is the fourth paper published at ICCV with MUST as the primary institution, further highlighting the university's research strength in artificial intelligence.

Liying Yang (Ph.D. candidate)

ICCV conference ranking among all publications worldwide

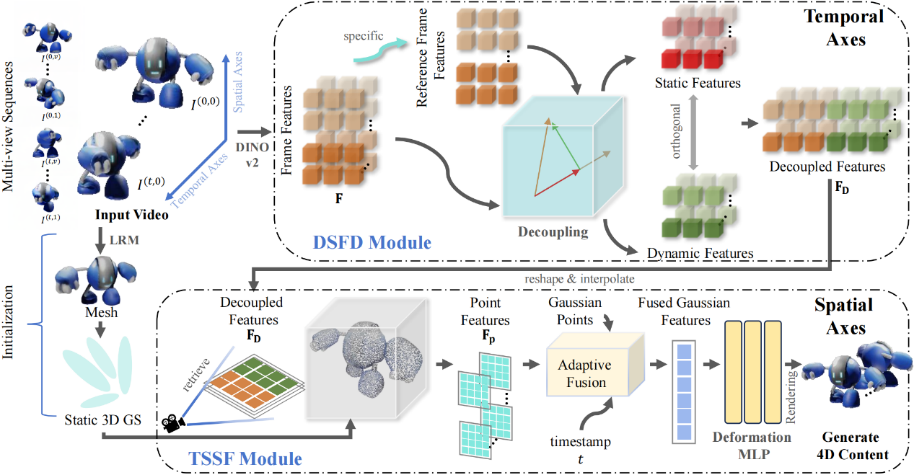

In recent years, significant progress has been made in generating dynamic 3D objects (4D) from videos. However, existing methods often struggle with scenes in which dynamic and static regions are intertwined: because static regions occupy a larger share of each frame, they tend to overshadow the dynamic information, resulting in blurred textures. To address this issue, Associate Professor Yanyan Liang and his team proposed the Dynamic-Static Feature Decoupling (DSFD) module. Working along the temporal axis, the module identifies regions where the current frame differs significantly from a reference frame, marks those regions as dynamic features, and treats the rest as static features. It then generates decoupled features driven by both the dynamic features and the current frame features. The team also designed a Temporal-Spatial Similarity Fusion (TSSF) module, which adaptively selects similar information for dynamic regions along the spatial axis to enhance the dynamic representation and ensure accurate motion prediction. Building on these two modules, the team developed a novel method named DS4D. Experiments demonstrate that DS4D achieves state-of-the-art (SOTA) performance in video-to-4D generation, and its effectiveness has also been validated on real-world scene datasets.
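The decoupling idea can be illustrated with a small sketch. This is not the DS4D implementation: the function name `decouple_dynamic_static`, the L2 feature distance, the max-normalization, and the fixed `threshold` are all assumptions chosen for illustration, and the actual module may well differ (for example, by learning this separation). The sketch only shows the general principle of marking positions where the current frame's features differ strongly from those of a reference frame.

```python
import numpy as np


def decouple_dynamic_static(current, reference, threshold=0.5):
    """Split a feature map into dynamic and static parts (illustrative only).

    current, reference: arrays of shape (H, W, C) holding per-position
    features of the current frame and a reference frame.
    threshold: hypothetical cutoff on the normalized feature difference.
    """
    # Per-position feature difference between the two frames.
    diff = np.linalg.norm(current - reference, axis=-1)  # shape (H, W)

    # Normalize to [0, 1] so the threshold is scale-independent.
    max_diff = diff.max()
    score = diff / max_diff if max_diff > 0 else np.zeros_like(diff)

    # Positions with a large difference are treated as dynamic.
    dynamic_mask = score > threshold

    # Keep current-frame features in each region and zero out the other.
    dynamic = np.where(dynamic_mask[..., None], current, 0.0)
    static = np.where(dynamic_mask[..., None], 0.0, current)
    return dynamic_mask, dynamic, static


# Toy example: only a 2x2 patch changes between the two frames.
reference = np.zeros((4, 4, 8))
current = reference.copy()
current[1:3, 1:3] += 1.0

mask, dynamic, static = decouple_dynamic_static(current, reference)
print(int(mask.sum()))  # 4 positions are flagged as dynamic
```

In this toy case the static region covers 12 of the 16 positions, which mirrors the situation described above: without an explicit separation, the small changed region would contribute little to any averaged representation.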

Flowchart of the overall process of the method proposed in the paper

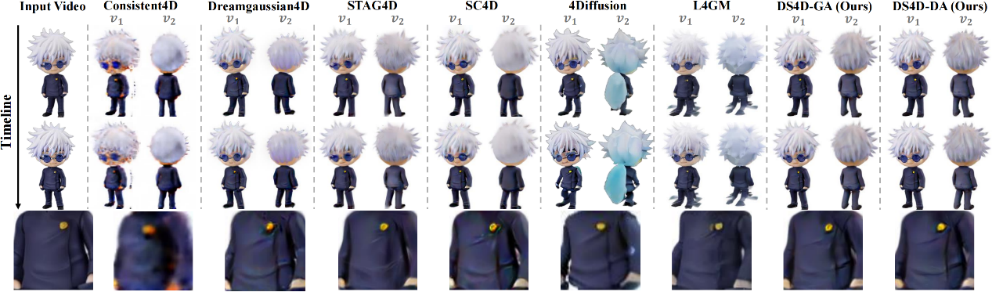

Examples of generated 4D renderings

This research not only provides a new technical pathway for video-to-4D generation but also opens up possibilities for modeling dynamic objects in complex scenes. Through theoretical innovation and experimental validation, the paper showcases MUST's leading position in computer vision and artificial intelligence. Liying Yang's publication as first author also reflects MUST's achievements in cultivating high-level scientific talent.

Macau University of Science and Technology will continue to support cutting-edge scientific research, drive innovation in artificial intelligence, and contribute more wisdom and strength to global technological progress.